Mark Bunting argues that the tech giants should accept ‘procedural accountability’.

The US, Germany and the UK have pretty disparate norms on business regulation, particularly when it involves free speech. So when law-makers in all three countries simultaneously consider how to regulate content on online platforms, it’s a significant moment. But the wrong kind of regulation could undermine the free flow of online information, target the wrong problems, and give platforms too much power rather than constraining them.

Google, Facebook and Twitter came under great pressure at recent congressional hearings, not just regarding Russia-backed interference, but more generally for their power, scale and perceived lack of accountability. Congress is considering draft legislation which would make websites liable for publishing material “designed to facilitate sex trafficking,” amending the long-established ‘liability exemption’ provisions of the Communications Decency Act, and the Honest Ads Act requiring disclosure for online political adverts.

The German Parliament has already passed a law requiring all platforms with over 2m registered users to delete “evidently unlawful” content once notified to them. Despite courts often taking months to determine whether content is hateful, defamatory or likely to incite violence, the platforms will have just 24 hours, or 7 days for hard cases. They face fines of up to €50m for persistently failing to take down infringing material.

The UK Government has published an Internet Safety Green Paper, which emphasises a voluntary approach, seeking to motivate tech firms to deal themselves with issues including online bullying and abuse, child protection, sexting, hate crime, digital literacy and brand safety.

The German law treats platforms like publishers. This is a common, but dangerous, approach. Start from there, and the solution to undesirable content seems easy: tell ‘publishers’ what to remove and impose punitive sanctions for non-compliance.

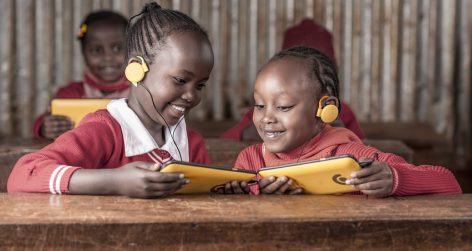

But this tackles the wrong problem. The old media policy problem was too much power concentrated in the hands of press barons and broadcasters who controlled both the production of content and its distribution. Now we have a different challenge – a surfeit of openness. Anybody can reach a global audience on Facebook, Telegram or the open web. That includes new talent, political movements, whistleblowers and campaigners – but also terrorist groups, Macedonian teenagers, sex traffickers and assorted misogynists, racists and trolls.

Regulating platforms as if they were publishers undermines the benefits of openness but does not mitigate its harms. It gives them more power, not less. When we task platforms with deciding whether content is political speech or incitement to violence, alternative perspective or fake news, violent content or evidence of war crimes, historically important imagery or pornography, we make it more likely that Facebook will become precisely the kind of tightly controlled, end-to-end media monolith that many fear.

This does not prevent illegal and harmful communication; it just drives it into harder-to-reach environments. And it acts as a barrier to competition – if any platform of scale must employ thousands of content moderators and build sophisticated vetting algorithms to comply with regulatory requirements and political expectations, how will competitors to Facebook and YouTube emerge?

Platforms’ role in media markets is different and needs a different regulatory approach. As early as the late 1990s, Lawrence Lessig wrote about how ‘code’ would shape markets, with regulatory implications. That’s what platforms do – they govern markets, using nuanced combinations of policies, technology, signals and human oversight to balance users’ interests and maximise the overall value of the platform. A complaint, or asset for some cyberlibertarians, is that the new players are “lawless.” But a deeper concern may be that they are “law makers” – in the sense of code, not statute.

This is uncomfortable for some legislators, but it also creates a new opportunity. As Microsoft’s Jonathan Grudin said recently, “We were in this position before, when printing presses broke the existing system of information management. A new system emerged and I believe we have the motivation and capability to do it again.” While platforms cannot practically pre-moderate the vast volumes of content uploaded to them, the power of code gives them great visibility and influence over how their markets operate, arguably more than traditional regulators. Effective regulation will recognise this power and work with it.

This means policy-makers will have to take a different approach. Online content regulation is not about specifying detailed policies, scrutinising content or imposing sanctions. It is about convening the parties who can do those things. Rather than making rules themselves, regulators must be focused on the governance of others. Are platforms’ rules and processes aligned with wider objectives? Do they measure the effect of those policies, and publish outcomes? Have they considered unintended consequences? Has due process been followed?

These responsibilities speak to what I call the ‘procedural accountability’ of platforms, as opposed to counter-productive content regulation. One example is the Code of Practice on Search and Copyright signed in early 2017 between search engines and content rightsholders. After lengthy negotiations, brokered by the UK Intellectual Property Office (IPO) and ministers, the parties agreed steps to reduce copyright-infringing material appearing in search results. But Government didn’t decide the desired outcome; the parties negotiated it. The agreement includes practical commitments, measures of success and an approach to evaluation. Implementation is overseen by ministers, while the IPO commissions independent research to track progress. However, the lack of redress for websites that believe they have been wrongly classed as infringing is a problem. All stakeholders need to be represented in procedural accountability regulation – including those who will push the case for freedom of expression, consumer interests and innovative competition.

There are similar good things in the UK government’s Internet Safety Green Paper: an emphasis on an enabling role for government, promoting the responsibility of technology companies within a voluntary approach, coordinating groups across society, and joining up across government. The proposed annual internet safety transparency report could shed valuable light on an issue where hard evidence remains sorely lacking. And if voluntarism fails, the threat of more directive regulation and sanctions is in the background.

But there are also areas of concern. The ‘comply or explain’ approach to reporting is heavy-handed, crude and should be dropped. And a levy on social media companies to support awareness-raising is likely to be less effective than the firms developing their own practical steps – as long as these are backed up by real action and resources.

This could turn out to be the defining media policy issue of Theresa May’s government. The UK, with a well-established media policy record, internationally respected regulators and – potentially – without the constraint of complex and lengthy European processes, may be well placed to lead the way in ‘procedural accountability’ regulation. But it will require flexibility, creativity and humility.

Mark Bunting is a member of Communications Chambers and a visiting associate at the Oxford Internet Institute.