Type ‘Bettina Wulff’, the name of a former German president’s wife, into Google and the autocomplete function will add ‘escort’. Is this algorithmic addition a form of defamation? Sebastian Huempfer explores the case.

The case

When Google users search for Bettina Wulff in English or German, the autocomplete function suggests they add “escort” or the German expressions for “prostitute” and “red-light” to the end of their query. These suggestions reflect widespread but unfounded rumours about the former German president’s wife, which were initially propagated by her husband’s political rivals.

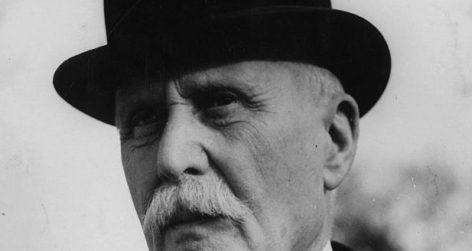

On 8 September 2012 Wulff filed a lawsuit against Google for defamation, accusing the company of “destroying [her] reputation”. Wulff also sued the well-known German TV host Günther Jauch for talking about the rumours and issued cease and desist letters to 34 publications in Germany and other countries. In a settlement, Jauch agreed to stop mentioning the rumours and courts ordered several publications to pay damages to Wulff.

Google has won five similar lawsuits in Germany, and the company has so far refused to change these automatic suggestions, arguing that "search terms in Google autocomplete reflect the actual search terms of all users" and are determined algorithmically, with no editorial decisions involved. Google has, however, changed autocomplete suggestions following defamation and intellectual property lawsuits in Japan, France and the UK. Autocomplete is also permanently disabled for many search terms, including "cocaine" and "schoolgirl", to avoid displaying offensive or illegal suggestions.

On its support site, Google says autocomplete allows users to "rest [their] fingers" and easily "repeat a favourite search". The company adds that it applies "a narrow set of removal policies for pornography, violence, hate speech, and terms that are frequently used to find content that infringes copyrights". Autocomplete suggestions can be, and in Wulff's case are, very different from the actual top search results.


A great piece!

Dominic's comment reminds me of Michael Bloomberg's recent attempt to prevent just that by buying around 400 domains containing his name, including unflattering ones such as MichaelBloombergisaWeiner.nyc and BloombergistooRich.nyc.

Unsurprisingly, this backfired as media outlets began reporting on the full list of bought domains…


If a person has legitimate complaints about a company's product, the company should listen to what its users say. Every Internet company needs this attitude.

After that, the company ought to consider the effects on the public, and then think carefully about how to balance personal privacy against fair access to information.


This is a great article and a tough question to argue either way.

It gives me an idea: if I ever run for political office, I will get my campaign team to produce lots of websites saying what a nice guy I am. Then, when people search for my name, autocomplete will suggest "Dominic Burbidge nice guy". Who knows, maybe my political opponents will then sue Google.